New AI-Generated ‘TikDocs’ Exploit Trust in the Medical Profession to Drive Sales

AI-generated medical scams are circulating on TikTok and Instagram, where deepfake avatars pose as healthcare professionals to promote unverified supplements and treatments.

These synthetic “doctors” exploit public trust in the medical field, often directing users to purchase products with exaggerated or entirely fabricated health claims.

With advancements in generative AI making deepfakes increasingly accessible, experts warn that such deceptive practices risk eroding confidence in legitimate health advice and endangering vulnerable populations.

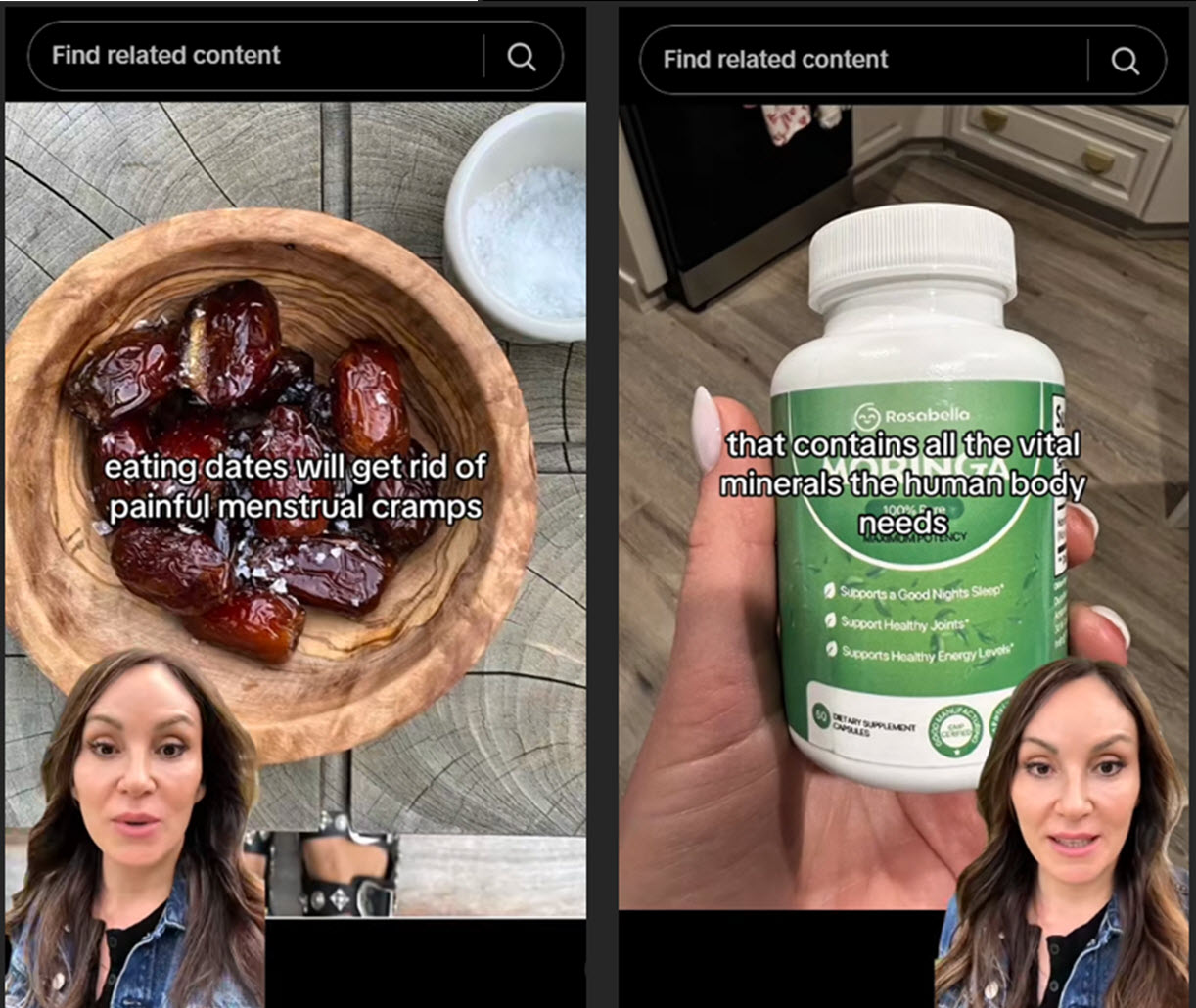

ESET researchers identified over 20 TikTok and Instagram accounts in Latin America using AI-generated avatars disguised as gynecologists, dietitians, and other specialists.

These avatars, often positioned in the corner of videos, deliver scripted endorsements for products ranging from “natural extracts” to unapproved pharmaceuticals.

For instance, one campaign promoted a supposed Ozempic alternative labeled as “relaxation drops” on Amazon, a product with no proven weight-loss benefits.

The videos leverage polished production quality and authoritative tones to mimic credible medical advice, blurring the line between education and advertisement.

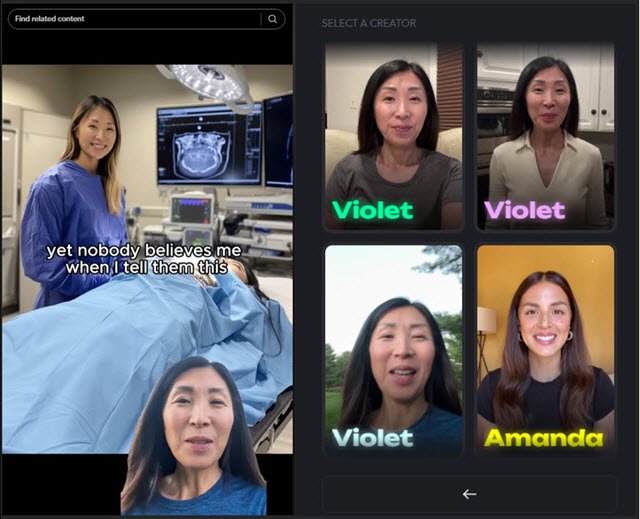

The technology behind these scams relies on legitimate AI tools designed for content creation.

Platforms offering avatar-generation services enable users to input minimal footage and produce lifelike videos, which scammers then repurpose for fraudulent campaigns.

This misuse highlights a critical vulnerability: while such tools empower creators, they lack robust safeguards to prevent malicious applications.

In some cases, deepfakes even hijack the likenesses of real doctors, as seen in UK campaigns impersonating TV figures like Dr. Michael Mosley.

Exploiting Trust in Healthcare Authority

Deepfake scams prey on the inherent trust placed in medical professionals. By framing sales pitches as expert recommendations, these videos sidestep skepticism typically directed at overt advertisements.

For example, a synthetic gynecologist with “13 years of experience” urged followers to purchase unregulated supplements, despite the account being linked to a generic avatar library.

Similarly, fake endorsements from celebrities like Tom Hanks have promoted “miracle cures,” capitalizing on their public personas to lend credibility to dubious products.

The consequences extend beyond financial loss. Victims may delay evidence-based treatments in favor of ineffective, or even harmful, alternatives.

Researchers note that deepfakes promoting fake cancer therapies or unapproved drugs could exacerbate health disparities, particularly among populations with limited healthcare access.

Moreover, the proliferation of such content undermines trust in telehealth and online medical resources, which surged during the COVID-19 pandemic.

Detection and Mitigation Strategies

While AI-generated content grows more sophisticated, experts recommend vigilance through both technical scrutiny and policy reforms. Key red flags include:

- Mismatched lip movements that don’t sync with the audio.

- Robotic or overly polished vocal patterns.

- Visual glitches, such as blurred edges or sudden lighting shifts.

- Hyperbolic claims like “miracle cures” or “guaranteed results”.

- Accounts with few followers, minimal history, or inconsistent posting.

Social media users should scrutinize accounts promoting “doctor-approved” products, particularly new profiles with minimal followers or suspicious activity.
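The red flags listed above can be treated as a simple manual checklist. The following is a minimal sketch, not a detection tool: the field names, the `triage` helper, and the threshold of two flags are illustrative assumptions that do not come from ESET's research, and a human reviewer is assumed to fill in each flag after watching the video.

```python
from dataclasses import dataclass, fields


@dataclass
class RedFlags:
    """Manual checklist mirroring the red flags above (hypothetical names)."""
    lip_sync_mismatch: bool = False      # lips do not match the audio
    robotic_voice: bool = False          # robotic or overly polished speech
    visual_glitches: bool = False        # blurred edges, sudden lighting shifts
    hyperbolic_claims: bool = False      # "miracle cure", "guaranteed results"
    thin_account_history: bool = False   # few followers, little or erratic history


def count_red_flags(flags: RedFlags) -> int:
    """Return how many checklist items the reviewer marked as present."""
    return sum(bool(getattr(flags, f.name)) for f in fields(flags))


def triage(flags: RedFlags, threshold: int = 2) -> str:
    """Suggest a closer look once the count reaches an assumed threshold."""
    return "verify before trusting" if count_red_flags(flags) >= threshold else "no flags raised"


if __name__ == "__main__":
    video = RedFlags(lip_sync_mismatch=True, hyperbolic_claims=True)
    print(count_red_flags(video), triage(video))
```

A low count is not evidence that a video is genuine. The checklist only prompts a viewer to verify the claim through an accredited source before acting on it.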

On a systemic level, advocates urge platforms to enforce stricter content moderation and labeling requirements for AI-generated media.

The EU’s Digital Services Act and proposed U.S. legislation like the Deepfakes Accountability Act aim to mandate transparency, though enforcement remains fragmented.

Technological solutions, such as AI-driven detection tools that analyze facial micro-expressions or voice cadence, are also being developed to flag synthetic content in real time.

Public education remains critical. Initiatives like New Mexico’s deepfake literacy campaigns and Australia’s telehealth guidelines emphasize verifying health claims through accredited sources like the WHO or peer-reviewed journals.

As ESET cybersecurity advisor Jake Moore notes, “Digital literacy is no longer optional; it’s a frontline defense against AI-driven exploitation.”

The escalation of deepfake medical scams underscores an urgent need for collaborative action.

While AI holds transformative potential for healthcare, its weaponization demands equally innovative countermeasures, from regulatory frameworks to public awareness, to safeguard both individual and collective well-being.